Twitter Launches Improved Warning Prompts to Reduce Toxicity on the Platform

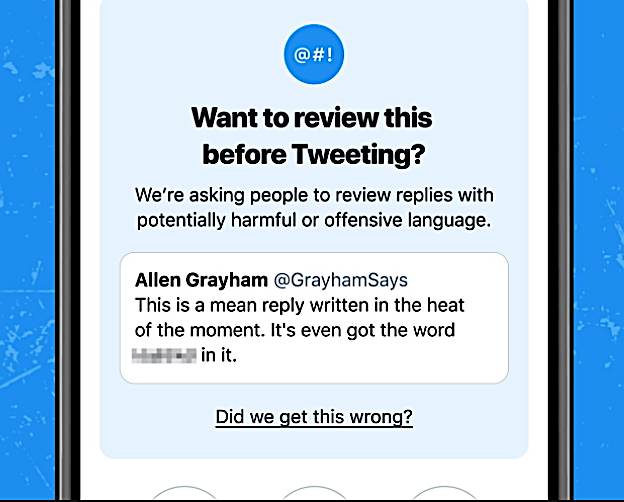

Twitter has launched an updated version of its warning prompts, including an improved detection algorithm designed to reduce misidentification.

The algorithm is intended to identify harmful and offensive language, including profanity, more accurately. It also takes nuance into account, such as the nature of the relationship between the replier and the author, including how often they interact.

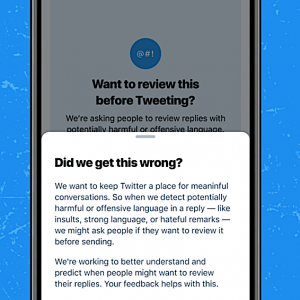

What’s more, the updated prompts give users more options to obtain information and context about the warning, and to provide feedback to Twitter about it. The company says it is striving for a more meticulous, accommodating and respectful service.

As explained by Twitter:

“In early tests, people were sometimes prompted unnecessarily because the algorithms powering the prompts struggled to capture the nuance in many conversations and often didn’t differentiate between potentially offensive language, sarcasm, and friendly banter. Throughout the experiment process, we analysed results, collected feedback from the public, and worked to address our errors, including detection inconsistencies.”

Twitter found that, in its tests, 34% of people who were shown a prompt revised their initial reply or decided not to post it at all. Moreover, after being prompted once, people composed, on average, 11% fewer offensive replies in the future.

A study led by Facebook found that misinterpretation plays a crucial role in creating friction: users often misread a response and, as a result, spark conflict.

Twitter’s overarching aim is to reduce toxicity on the platform and foster a safer space for users to interact with one another.

Admittedly, the company has a long way to go before it eradicates toxicity from the Twitterverse. That said, this more advanced system is a step in the right direction.
