Facebook and TikTok Introduce New Methods of Information Regulation

Social media giants are taking even further steps to stop the dissemination of dis/misinformation online.

During the US Presidential election, Facebook and Twitter began flagging misleading posts with a label stating “this post is sharing inaccurate information” to curb the circulation of false claims. Social media platforms then extended these alerts to information about COVID-19 vaccination and other contentious subjects.

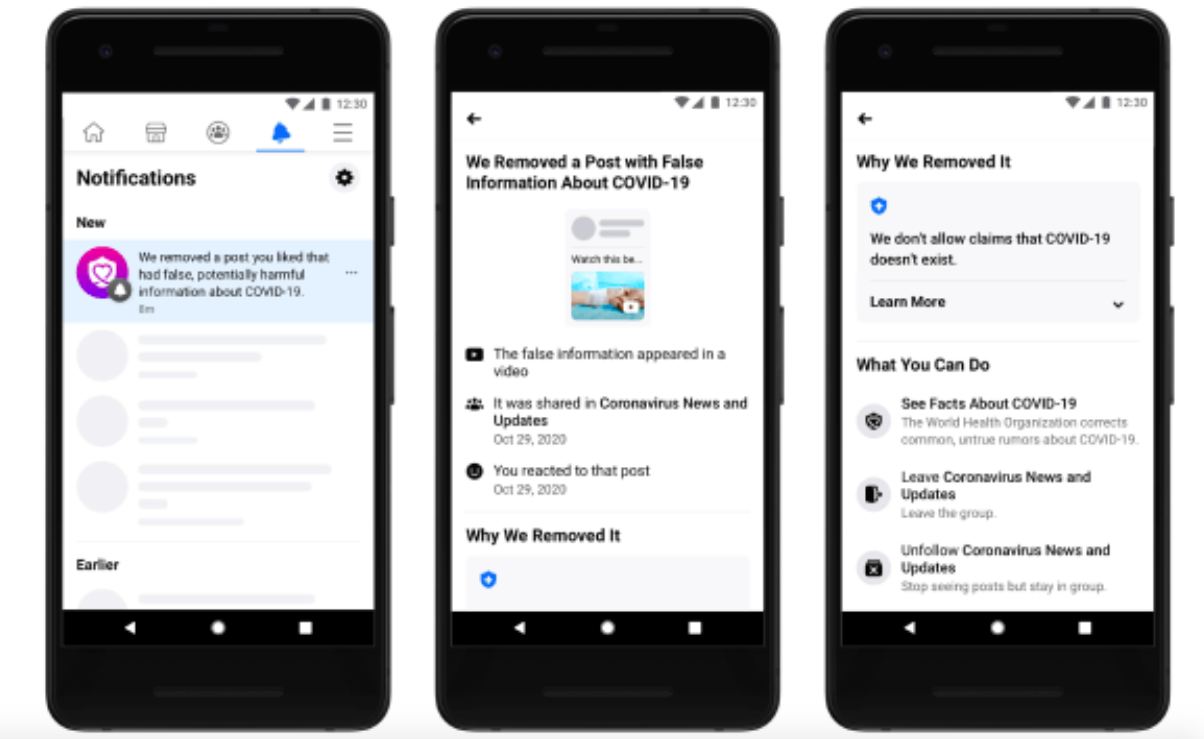

Now, Facebook will send targeted notifications to users who have engaged with a post containing misleading or inaccurate information. Many of these notifications will relate to COVID-19 and have been written to communicate their purpose more clearly. For example, a notification might pop up saying, “We removed a post you liked that had false, potentially harmful information about COVID-19,” along with details on the removal and an explanation of why the content was removed.

Facebook decided to release these revised notifications following recent research, which revealed that the original misinformation labelling had not been as effective as hoped.

Platformer reported on an interview study, stating, “eight of 15 participants said that platforms have a responsibility to label misinformation and we’re glad to see it. The remaining seven took a hostile attitude towards labelling, viewing the practice as ‘judgemental, paternalistic and against the platform ethos.’” One participant even said, “I thought the manipulated media label meant to tell me the media is manipulating me.”

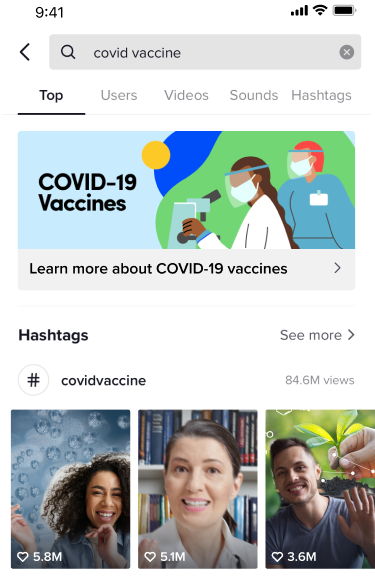

TikTok has previously limited the spread of falsehoods on its popular video-streaming app by introducing an in-app notice, so that users searching for hashtags related to the pandemic would be directed to the websites of the World Health Organisation and the British Red Cross. It also partnered with trusted sources to introduce an in-app information hub, giving the TikTok community access to accurate information.

TikTok is now building on this work by taking the following steps:

- Promoting authoritative information – “We are updating our information hub in-app so that when people search for vaccine information in-app, they will be directed to trusted information about the vaccine from respected experts. This will begin to roll out globally from 17 December.”

- Supporting our moderation teams – “Our moderation teams continue to do critical work to keep harmful content off TikTok. We also recognise that this is a difficult job, and our reviewers are human meaning they occasionally make mistakes. To minimise the chance that mistakes happen at such a crucial time for public health, we have been holding additional training sessions with our moderation teams.”

- Partnering with industry experts – “To further aid our efforts to identify and reduce the spread of misinformation, we are continuing our work with third-party fact-checking organisations. We also work with outside experts to understand what kind of misinformation trends are occurring on other platforms. By being alert to content trends outside of TikTok, our teams can issue guidance to our moderation teams to help them more easily spot and take action on violating content.”

Let’s hope that these social media companies’ refined methods of information regulation prove effective in the coming months.
